By Bastien Triclot, CEO of Zozio & Industry 4.0 Transformation Expert

The industrial sector is experiencing a complexity crisis. While we have invested billions in digitalization, Overall Equipment Effectiveness (OEE) is stagnating across many sectors. According to McKinsey, generative artificial intelligence could unlock up to $3.7 trillion in annual value within the manufacturing sector, but a major obstacle remains: the data is there, but it is “silent.”

Here is why we are moving from the era of collected data to the era of orchestrated intelligence.

The Data Paradox: Why Industry 4.0 Failed to Deliver on Its Promises

For a decade, the industry has followed the “data is king” mantra. We installed sensors on every controller and deployed expensive ERP and MES systems. However, Gartner’s findings are stark: 80% of industrial data is “Dark Data,” untapped and siloed.

The False Promise of the Dashboard

We have drowned managers in KPIs. But a dashboard does not make decisions; it merely records a failure.

- The Cognitive Cost: Teams spend 70% of their time reconciling data from divergent sources (the ERP says “in stock,” the warehouse worker says “missing,” the MES says “in progress”).
- The Dictatorship of Excel: To compensate for rigid systems, the factory runs on thousands of “home-made” Excel files. These create technical debt and cause an immense loss of expertise when employees retire.
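To make the cognitive cost concrete, here is a minimal sketch of the reconciliation teams do by hand: one part as three systems see it, each status mapped to a shared vocabulary, and any disagreement flagged. The part numbers, status strings, and `STATUS_MAP` are hypothetical. A real integration would read from each vendor's ERP, MES, and warehouse interfaces.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Observation:
    source: str   # system reporting the status, e.g. "ERP", "MES", "warehouse"
    part: str     # part number shared across systems
    status: str   # status string in the source system's own vocabulary

# Hypothetical mapping from each source's wording to one shared vocabulary.
STATUS_MAP = {
    ("ERP", "in stock"): "available",
    ("warehouse", "missing"): "unavailable",
    ("warehouse", "on shelf"): "available",
    ("MES", "in progress"): "in_production",
}

def reconcile(observations):
    """Group observations by part and return only parts whose normalized statuses disagree."""
    by_part = {}
    for obs in observations:
        normalized = STATUS_MAP.get((obs.source, obs.status), "unknown")
        by_part.setdefault(obs.part, {})[obs.source] = normalized
    return {part: views for part, views in by_part.items() if len(set(views.values())) > 1}

snapshot = [
    Observation("ERP", "P-1042", "in stock"),
    Observation("warehouse", "P-1042", "missing"),
    Observation("MES", "P-1042", "in progress"),
    Observation("ERP", "P-2001", "in stock"),
    Observation("warehouse", "P-2001", "on shelf"),
]
conflicts = reconcile(snapshot)
print(conflicts)
```

Only P-1042 is reported: for P-2001, every system agrees once the vocabularies are aligned.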

The real problem is not technological; it is semantic. Machines speak in protocols (OPC-UA, MQTT), systems speak in SQL tables, and humans speak in natural language. Until now, the bridge between these worlds was broken.
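A minimal sketch of what “bridging” can mean in practice, assuming a hypothetical MQTT topic layout (`<site>/<line>/<machine>/<measure>`) and a hypothetical SQL schema with a `machine_id` column. Both sources are reduced to the same (entity, attribute, value) facts, which a query layer or a language model can then reason over. No MQTT or OPC UA client library is used: the message is passed as a plain topic string and JSON payload.

```python
import json

def from_mqtt(topic, payload):
    """Turn an MQTT-style message into (entity, attribute, value) facts.

    Assumes the hypothetical topic layout <site>/<line>/<machine>/<measure>.
    """
    _site, line, machine, measure = topic.split("/")
    data = json.loads(payload)
    unit = data.get("unit", "?")
    return [(f"{line}/{machine}", f"{measure} ({unit})", data["value"])]

def from_sql_row(columns, row):
    """Turn one SQL result row into facts, keyed by an assumed 'machine_id' column."""
    record = dict(zip(columns, row))
    entity = record.pop("machine_id")
    return [(entity, column, value) for column, value in record.items()]

# A telemetry message as a machine might publish it...
facts = from_mqtt("plant1/line4/press2/temperature", '{"value": 78.5, "unit": "C"}')
# ...and a row as the MES database might return it.
facts += from_sql_row(("machine_id", "open_work_order"), ("line4/press2", "WO-5531"))

for entity, attribute, value in facts:
    print(entity, attribute, value)
```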

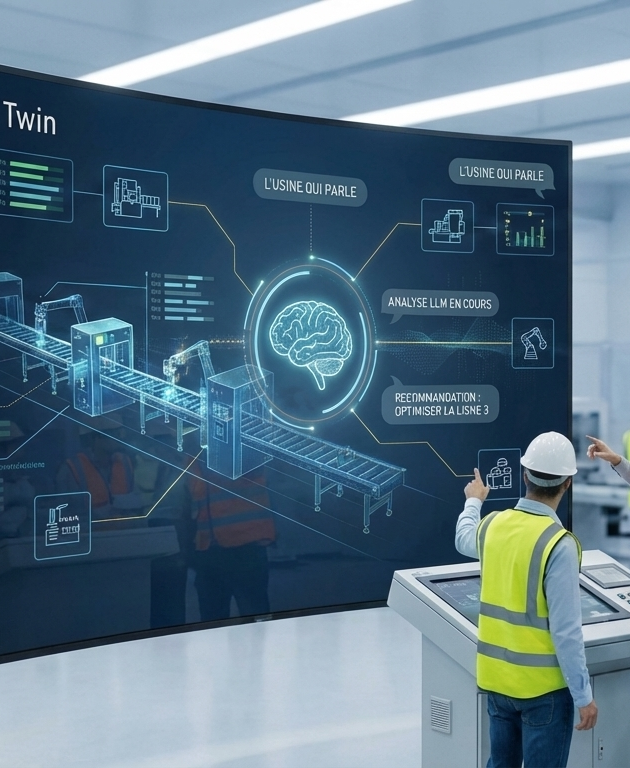

The Digital Twin: Much More Than a Model—A “Digital Thread”

For artificial intelligence to reason, it needs context. This is where the Production Digital Twin comes in. Contrary to popular belief, a high-performing digital twin is not just a 3D visualization of the factory. It is a structured data architecture that models cause-and-effect relationships.

Components of the Digital Thread

According to Deloitte, AI’s success relies on this digital continuity:

- The Knowledge Graph: Linking a part (serial number) to its machine, the operator who produced it, the workshop temperature at that moment, and the raw material supplier.
- Constraint Modeling: Integrating business rules (e.g., “This machine requires 2 hours of cooling after 8 hours of production”).
- Operational Reality: Unlike a theoretical simulation, the digital twin self-corrects using real-time field data.
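The first two components can be sketched in a few lines of Python. The triples, identifiers, and schedule below are hypothetical, and a production twin would use a graph database rather than a dictionary. `trace` follows the serial number's links two hops out (part → lot → supplier), and `cooling_violations` encodes the cooling rule quoted above as “no more than 8 hours of production without at least 2 consecutive hours of cooling.”

```python
from collections import defaultdict

# Hypothetical knowledge-graph triples: (subject, relation, object).
TRIPLES = [
    ("SN-0001", "produced_on", "press2"),
    ("SN-0001", "operated_by", "operator_17"),
    ("SN-0001", "made_from", "lot_A12"),
    ("SN-0001", "workshop_temp_c", 31.0),
    ("lot_A12", "supplied_by", "supplier_X"),
]

graph = defaultdict(list)
for subject, relation, obj in TRIPLES:
    graph[subject].append((relation, obj))

def trace(node, depth=2):
    """Collect every edge reachable from `node` within `depth` hops (a genealogy query)."""
    found, frontier = [], [node]
    for _ in range(depth):
        next_frontier = []
        for current in frontier:
            for relation, obj in graph.get(current, []):
                found.append((current, relation, obj))
                next_frontier.append(obj)
        frontier = next_frontier
    return found

def cooling_violations(schedule, max_run=8.0, min_cool=2.0):
    """Return indices of 'run' blocks that push production past `max_run` hours
    without `min_cool` consecutive idle hours. `schedule` is a list of
    ("run" | "idle", hours) blocks."""
    violations, run_since_cool, idle_streak = [], 0.0, 0.0
    for i, (kind, hours) in enumerate(schedule):
        if kind == "idle":
            idle_streak += hours
            if idle_streak >= min_cool:
                run_since_cool = 0.0  # the machine has cooled: the counter restarts
        else:
            idle_streak = 0.0
            run_since_cool += hours
            if run_since_cool > max_run:
                violations.append(i)
    return violations

for edge in trace("SN-0001"):
    print(edge)
print(cooling_violations([("run", 8), ("idle", 1), ("run", 2)]))
```

The second hop is what links a defective part back to its raw material supplier. The rule check flags the final block because one hour of cooling is not enough.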

The LLM as a Reasoning Interface

The arrival of Large Language Models (LLMs) provides the missing piece: the ability to understand and synthesize context. But beware: on its own, an LLM “hallucinates.” In industry, error is not an option.

The RAG Revolution (Retrieval-Augmented Generation)

The target architecture is RAG. Instead of asking the LLM to “know,” we ask it to “search” within the factory data (the digital twin) and “formulate” a reasoned response.
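A minimal sketch of the “search, then formulate” loop, under two simplifying assumptions. First, retrieval uses term-frequency cosine similarity over a handful of hypothetical text snippets. A real deployment would use learned embeddings stored in a vector database. Second, the actual LLM call is left out: the sketch stops at the grounded prompt that would be sent to whichever model you use.

```python
import math
import re
from collections import Counter

# Hypothetical snippets exported from the digital twin and maintenance records.
DOCUMENTS = {
    "maint-0412": "Press 2 stopped: bearing wear detected, vibration above threshold.",
    "qrqc-0415": "Line 4 non-compliance: burrs on part edges after tool change.",
    "stock-0416": "Bearing kit BK-7 stock level: 2 units remaining.",
}

def tokenize(text):
    return re.findall(r"[a-z0-9]+", text.lower())

def cosine(a, b):
    """Cosine similarity between two term-frequency Counters."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(question, k=2):
    """The 'search' step: rank documents by similarity and keep the top k with a nonzero score."""
    q = Counter(tokenize(question))
    scored = [(cosine(q, Counter(tokenize(text))), doc_id) for doc_id, text in DOCUMENTS.items()]
    scored.sort(reverse=True)
    return [doc_id for score, doc_id in scored[:k] if score > 0]

def build_prompt(question, k=2):
    """The input to the 'formulate' step: the question plus only the retrieved sources."""
    context = "\n".join(f"[{d}] {DOCUMENTS[d]}" for d in retrieve(question, k))
    return (
        "Answer using only the sources below and cite their IDs. "
        "If they are insufficient, say so.\n"
        f"Sources:\n{context}\n\nQuestion: {question}"
    )

print(build_prompt("Why did press 2 stop, and do we have bearing kits in stock?"))
```

Telling the model to cite source IDs and admit when the sources are insufficient is what limits hallucination. The model is asked to reason over retrieved factory data, not to recall facts.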

- The Structured / Unstructured Bridge: The LLM can combine, in a single answer, figures queried from a SQL database (stock levels) and the content of a maintenance report in PDF format (the “why” of the breakdown).
- Democratized Access: A team leader no longer needs to master SQL or Power BI. They simply ask: “What are the three main causes of non-compliance on Line 4 this week?” The LLM analyzes QRQC (Quick Response Quality Control) reports, compares them with machine parameters, and responds in seconds.
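Here is a sketch of the structured half of that team leader's question, using Python's built-in `sqlite3`. The `qrqc` table, its columns, week number 12, and the free-text notes are all hypothetical. In the target architecture, an LLM-driven text-to-SQL tool would generate or select the query. Here it is written by hand, and the result is merged with the matching report text.

```python
import sqlite3

# Hypothetical QRQC table; in practice this would live in the MES or the data warehouse.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE qrqc (line TEXT, week INTEGER, cause TEXT)")
conn.executemany(
    "INSERT INTO qrqc VALUES (?, ?, ?)",
    [("Line 4", 12, "tool wear")] * 5
    + [("Line 4", 12, "material defect")] * 3
    + [("Line 4", 12, "setup error")] * 2
    + [("Line 4", 12, "operator error")] * 1
    + [("Line 2", 12, "tool wear")] * 4,
)

# The kind of query a text-to-SQL tool might produce for
# "What are the three main causes of non-compliance on Line 4 this week?"
TOP_CAUSES_SQL = """
    SELECT cause, COUNT(*) AS n FROM qrqc
    WHERE line = ? AND week = ?
    GROUP BY cause ORDER BY n DESC LIMIT 3
"""
top_causes = conn.execute(TOP_CAUSES_SQL, ("Line 4", 12)).fetchall()

# Unstructured side: free-text notes, e.g. text extracted from PDF reports (hypothetical).
NOTES = {
    "tool wear": "Maintenance note: cutting insert replaced late on night shift.",
    "material defect": "Supplier lot A12 flagged for porosity.",
}

def build_context(rows, notes):
    """Merge structured counts with the matching free-text explanation, if any."""
    return "\n".join(
        f"{cause}: {n} cases. {notes.get(cause, 'No report found.')}" for cause, n in rows
    )

print(build_context(top_causes, NOTES))
```

The merged context (counts plus the “why”) is what the LLM would turn into its answer.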

Knowledge Graphs

Knowledge graphs are the data structure favored by leaders such as BMW and Siemens to power their digital twins, because they can model complex relationships between machines, parts, and people. It is precisely this entanglement of different objects that made Industry 4.0 so complex.

Target Architecture: From Sensor to Reasoning

To implement this vision, a rigorous architecture is necessary. It is broken down into 5 key layers:

- Ingestion & Governance: Cleaning data from ERP, MES, and IoT. Without clean data, the LLM will produce erroneous conclusions.
- Industrial Data Warehouse: Historical and structured storage centered around the digital twin.
- Vector Database: Storage of unstructured data (PDFs, emails, reports) converted into “vectors” (numerical embeddings) so the AI can search it by meaning.
- Orchestration Layer (LLM): The brain that receives the query, chooses which agent to activate, and verifies the consistency of the responses.
- User Interface (HMI 2.0): Mobile applications, shop floor tablets, or voice interfaces for natural interaction.
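The first layer decides everything downstream, so here is a minimal sketch of what “cleaning” can mean for a stream of temperature readings. It drops duplicates, converts Fahrenheit to Celsius, and quarantines implausible values with a reason rather than silently discarding them. The `Reading` fields, the unit codes, and the plausible range are assumptions for illustration.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Reading:
    machine: str
    timestamp: str  # ISO 8601 string, assumed to be already in UTC
    value: float
    unit: str       # "C" or "F" in this sketch

# Assumed plausibility range for a workshop temperature sensor, in °C.
VALID_RANGE_C = (-20.0, 150.0)

def to_celsius(reading):
    if reading.unit == "F":
        return (reading.value - 32.0) * 5.0 / 9.0
    if reading.unit == "C":
        return reading.value
    raise ValueError(f"unknown unit {reading.unit!r}")

def clean(readings):
    """Deduplicate, normalize units, and quarantine implausible values with a reason."""
    accepted, rejected, seen = [], [], set()
    for r in readings:
        key = (r.machine, r.timestamp)
        if key in seen:
            continue  # duplicate message (e.g. a retransmission): keep the first copy
        seen.add(key)
        try:
            celsius = to_celsius(r)
        except ValueError as err:
            rejected.append((r, str(err)))
            continue
        low, high = VALID_RANGE_C
        if not low <= celsius <= high:
            rejected.append((r, "out of range"))
            continue
        accepted.append(Reading(r.machine, r.timestamp, round(celsius, 2), "C"))
    return accepted, rejected

raw = [
    Reading("press2", "2024-03-12T08:00:00Z", 176.0, "F"),
    Reading("press2", "2024-03-12T08:00:00Z", 176.0, "F"),  # duplicate
    Reading("press2", "2024-03-12T08:01:00Z", 999.0, "C"),  # implausible
    Reading("press3", "2024-03-12T08:00:00Z", 21.5, "K"),   # unsupported unit
]
accepted, rejected = clean(raw)
print(accepted)
print([reason for _, reason in rejected])
```

Keeping the rejected readings together with their reasons is the “governance” half: the quarantine can be audited instead of vanishing.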

The Era of Agentic Systems: An Army of Specialized Experts

The true power emerges when the LLM no longer simply answers questions but becomes an orchestrator of autonomous agents. The Boston Consulting Group (BCG) calls this agentic AI. Imagine micro-AIs dedicated to critical missions:
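To picture the orchestrator's dispatch role, here is a deliberately simple sketch. Keyword scoring stands in for the intent classification an LLM would perform (for example through tool or function calling). The three agents are hypothetical stubs named after the ones described below. The consistency check mentioned in the architecture is not shown.

```python
from typing import Callable, Dict, List

# Hypothetical specialist agents; real ones would query the twin, run models, call an APS, etc.
def rca_agent(query: str) -> str:
    return "RCA: searching defect history for similar incidents"

def scheduling_agent(query: str) -> str:
    return "Scheduling: simulating the requested scenario"

def memory_agent(query: str) -> str:
    return "Memory: searching expert notes and procedures"

# Keyword routing is a stand-in for LLM intent classification (an assumption of this sketch).
ROUTES: Dict[str, List[str]] = {
    "rca": ["defect", "failure", "breakdown", "root cause"],
    "scheduling": ["delay", "order", "schedule", "deadline"],
}
AGENTS: Dict[str, Callable[[str], str]] = {
    "rca": rca_agent,
    "scheduling": scheduling_agent,
    "memory": memory_agent,
}

def route(query: str) -> str:
    """Pick the agent with the most keyword hits; fall back to the memory agent."""
    text = query.lower()
    scores = {name: sum(kw in text for kw in kws) for name, kws in ROUTES.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else "memory"

def orchestrate(query: str) -> str:
    return AGENTS[route(query)](query)

print(orchestrate("If I delay this order, which deadlines slip?"))
```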

The Root Cause Analysis (RCA) Agent

This agent never sleeps. As soon as a defect is detected, it scans the history of the last 5 years, machine parameters, shift changes, and quality reports. It doesn’t just give a statistic; it provides an actionable hypothesis: “The incident resembles the one from March 12, 2023, related to premature wear of bearing X.”
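The “this resembles March 12, 2023” step is essentially a nearest-neighbor search over past incidents. The sketch below describes each incident as a set of observed conditions and uses Jaccard similarity (shared conditions divided by all conditions). The incident history, condition labels, and the 0.3 threshold are hypothetical. A real agent might compare embeddings or sensor signatures instead, and its output is a hypothesis to check, not a proven cause.

```python
# Hypothetical incident history: each incident is described by a set of observed conditions.
HISTORY = {
    "2023-03-12 bearing X premature wear": {
        "vibration_high", "temp_rising", "night_shift", "line4", "press2"},
    "2023-09-05 hydraulic leak": {"pressure_drop", "oil_on_floor", "line2"},
    "2024-01-20 tool breakage": {"spindle_load_spike", "line4", "new_tool_batch"},
}

def jaccard(a, b):
    """Size of the intersection divided by size of the union of two sets."""
    return len(a & b) / len(a | b) if a | b else 0.0

def most_similar(new_incident, history=HISTORY, min_score=0.3):
    """Return (incident, score) for the closest past incident, or None if none is close enough."""
    best = max(history.items(), key=lambda item: jaccard(new_incident, item[1]))
    score = jaccard(new_incident, best[1])
    return (best[0], round(score, 2)) if score >= min_score else None

new = {"vibration_high", "temp_rising", "line4", "press2", "day_shift"}
print(most_similar(new))
```

Here four of the six distinct conditions match the March 2023 bearing incident (4/6 ≈ 0.67), which is the kind of actionable hypothesis described above.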

The Scheduling Copilot Agent

Scheduling is a mathematical nightmare. This agent works with advanced planning and scheduling (APS) algorithms to test scenarios expressed in natural language: “If I delay this VIP customer’s order until tomorrow, what is the impact on the production cost and deadlines for the 10 other orders?”
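Behind such a question sits a what-if computation. The sketch below is not an APS. It models a single bottleneck machine that processes orders back to back, and it reports how each order's lateness changes when one order is moved to the end of the queue. The orders, durations, and due times are hypothetical, and cost is not modeled. The agent's role would be to translate the natural-language question into a call like `what_if_delay` and explain the result.

```python
from dataclasses import dataclass
from typing import Dict, List

@dataclass(frozen=True)
class Order:
    name: str
    hours: float  # processing time on the bottleneck machine
    due: float    # due time, in hours from now

def tardiness(sequence: List[Order]) -> Dict[str, float]:
    """Process orders back to back on one machine; return each order's lateness (0 if on time)."""
    clock, late = 0.0, {}
    for order in sequence:
        clock += order.hours
        late[order.name] = max(0.0, clock - order.due)
    return late

def what_if_delay(sequence: List[Order], name: str) -> Dict[str, float]:
    """Move one order to the end of the queue and return the change in lateness per order."""
    moved = [o for o in sequence if o.name != name] + [o for o in sequence if o.name == name]
    before, after = tardiness(sequence), tardiness(moved)
    return {n: after[n] - before[n] for n in before}

orders = [Order("VIP", 6, 6), Order("A", 4, 8), Order("B", 3, 14), Order("C", 5, 12)]
print(what_if_delay(orders, "VIP"))
```

In this toy case, delaying the VIP order makes it 12 hours late while A and C recover 2 and 6 hours. That trade-off is exactly what the planner wants to see before deciding.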

The Industrial Memory Agent

In the context of the “war for talent,” AI captures the tacit knowledge of senior experts before they leave. It turns informal exchanges and field notes into a living knowledge base that can train new arrivals in real time. Onboarding is faster, and know-how is secured.

Conclusion: The Augmented Factory, a Strategic Imperative

We are no longer at the experimentation stage. Companies that adopt the LLM as the brain of their digital twin will gain an agility that traditional systems cannot offer.

As highlighted by the World Economic Forum, Industry 5.0 puts the human back at the center. The LLM is not there to replace the operator, but to give them back their superpower: informed decision-making. The factory will no longer be a black box of data; it will finally be a transparent, learning entity that people can talk to.